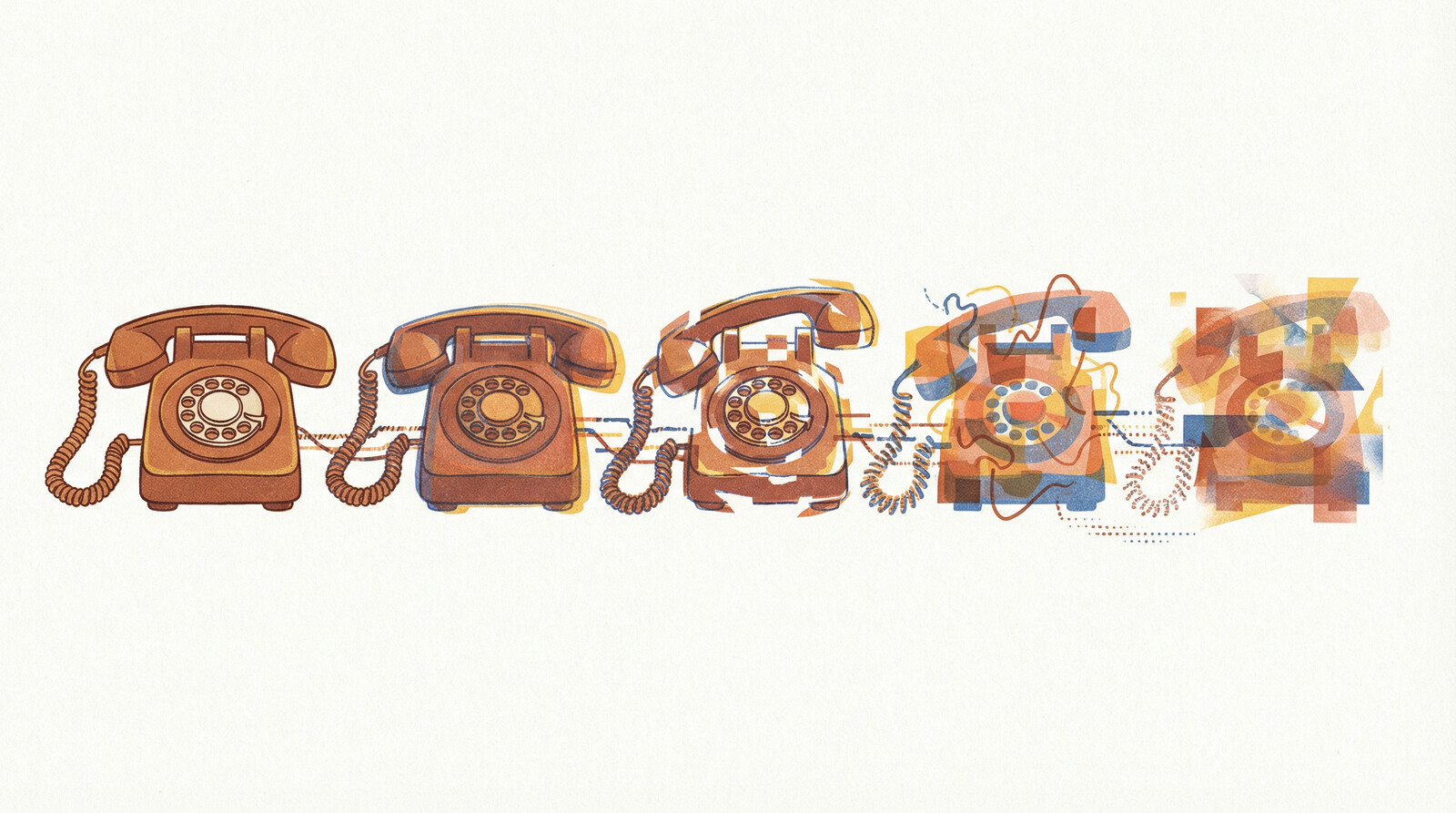

AI document systems process information in layers. Each layer discards something. By the time you get an answer, you’re several steps removed from what the document actually said.

This is context rot: the progressive loss of precision as information moves through processing pipelines.

The Three-Layer Problem

Most AI document systems work like this:

Layer 1: Extract text from PDFs. Tables become garbled. Scanned images miss text. Complex formatting collapses.

Layer 2: Chunk that text into smaller pieces for search. Discussions that span pages get split mid-sentence, and the context you need often sits in a chunk that was never retrieved.

Layer 3: Summarize or extract key points. Precision gets compressed into approximations.
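To make the Layer 2 problem concrete, here is a minimal sketch of naive fixed-size chunking. The `chunk_fixed` function and the 60-character chunk size are assumptions chosen for illustration, not a description of any particular product's chunker.

```python
def chunk_fixed(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of at most `size` characters.

    Hypothetical helper: it ignores sentence and clause boundaries,
    which is exactly what causes mid-sentence splits.
    """
    return [text[i:i + size] for i in range(0, len(text), size)]


clause = (
    "Tenant shall be solely responsible for environmental contamination "
    "arising from operations during lease term (1998-2003)."
)

# Assumed chunk size, kept small so the split is visible on one clause.
chunks = chunk_fixed(clause, 60)

for number, chunk in enumerate(chunks, start=1):
    print(number, repr(chunk))

# Chunks that name the responsible party AND the lease term together.
complete = [c for c in chunks if "Tenant" in c and "1998-2003" in c]
print("chunks holding both party and dates:", len(complete))
```

No single chunk carries both the responsible party and the lease term, so a search that retrieves only one of them returns half of the clause.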

Here’s what that looks like in practice:

Original lease clause: “Tenant shall be solely responsible for environmental contamination arising from operations during lease term (1998-2003).”

After summarization: “Lease agreement on file, environmental provisions included.”

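The summary confirms that environmental provisions exist but drops who is responsible and for which years, so the liability allocation clause you need for cost recovery is gone.

Or consider regulatory correspondence: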

Original: “Submit Phase II ESA by March 15, 2024 per AEP directive letter dated January 8.”

After summarization: “Regulatory compliance documentation required.”

You’ve lost the actionable deadline and the regulatory authority reference. When you’re conducting Phase II document review or preparing regulatory closure packages, these details determine whether you meet compliance requirements or miss filing deadlines.

Why Pre-Summarization Fails

Some systems pre-summarize documents during upload. This creates a fundamental problem: when you ask a question, the AI searches summaries, not source text.

If you ask “Who operated the facility in 1987?” and the summary says “Multiple operators managed the site over several decades,” you’ve lost the specific party and timeframe needed for EPEA Section 112 liability allocation. The information exists in the original document, but the system is searching a lossy copy.

Worse, you can’t know what you’ve missed, because the system never indicates it’s searching summaries instead of sources.
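The failure can be sketched with a toy keyword search. Everything here is hypothetical: the `search` function stands in for whatever retrieval a real system uses, and the source sentence (including "Operator A") is invented for illustration.

```python
def search(query_terms: list[str], passages: list[str]) -> list[str]:
    """Return passages containing every query term (case-insensitive)."""
    terms = [t.lower() for t in query_terms]
    return [p for p in passages if all(t in p.lower() for t in terms)]


# Invented source sentence, for illustration only.
source_passages = ["In 1987 the facility was operated by Operator A."]

# The summary wording quoted in the paragraph above.
summary_passages = ["Multiple operators managed the site over several decades."]

query = ["operated", "1987"]

print("source hits: ", search(query, source_passages))
print("summary hits:", search(query, summary_passages))
```

The search over summaries comes back empty without raising any error, which is why the gap is easy to miss.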

Source Fidelity Over Summarization

Statvis maintains direct access to source documents:

Structured extraction preserves tables, multi-page sections, and formatting context. When you retrieve a passage, you get the original text with metadata showing exactly where it appears: document name, page number, bounding box coordinates.
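One way to picture a retrieved passage with its location metadata is as a small record. This is a sketch under assumptions, not Statvis's actual schema: the class names, field names, file name, page number, and coordinates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Rectangle on a page; the coordinate convention is an assumption."""
    x0: float
    y0: float
    x1: float
    y1: float


@dataclass(frozen=True)
class Passage:
    """Original text plus where it appears in the source document."""
    text: str
    document_name: str
    page_number: int
    bbox: BoundingBox

    def citation(self) -> str:
        """Format a human-readable page-level citation."""
        return f"{self.document_name}, p. {self.page_number}"


# All values below are made up for illustration.
passage = Passage(
    text="Approximately 5,000 gallons of diesel fuel released.",
    document_name="incident_report_1998-06.pdf",
    page_number=3,
    bbox=BoundingBox(72.0, 540.0, 523.0, 562.0),
)

print(passage.citation())
```

Making the record immutable (`frozen=True`) reflects the idea that the stored text is the source wording and is never rewritten after extraction.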

Page-level citations connect every claim to its source. If the system says “Approximately 5,000 gallons of diesel fuel released per incident report dated June 1998,” you can click through to the actual document page and verify the claim by reading the original text.

The information doesn’t degrade because it’s retrieved, not regenerated. This matters for institutional memory: site knowledge will be referenced for years, through personnel changes, litigation, and remediation. When someone asks “What was the spill volume?” in 2031, they need the same answer they’d get today, not a degraded approximation derived from layers of summarization.

Citations Prevent Compounding Error

Every Statvis response includes page-level citations. This serves as a structural safeguard: if the system claims something exists in your documents, you can verify it. If it misunderstands or misattributes, you’ll catch it when you check the source.
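The check a reader performs by clicking through can also be sketched in code: does the quoted text actually appear on the cited page? The `verify_citation` function, the page dictionary, and the page number below are hypothetical, and the comparison is a plain normalized substring match rather than anything a real system is known to use.

```python
import re


def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so line breaks do not block a match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def verify_citation(quote: str, pages: dict[int, str], page_number: int) -> bool:
    """Return True only if `quote` appears in the text of the cited page."""
    page_text = pages.get(page_number)
    if page_text is None:
        return False  # the cited page does not exist
    return normalize(quote) in normalize(page_text)


# Hypothetical extracted page text, keyed by page number.
pages = {12: "Approximately 5,000 gallons\nof diesel fuel released."}

print(verify_citation("5,000 gallons of diesel fuel", pages, 12))
print(verify_citation("50,000 gallons of diesel fuel", pages, 12))
print(verify_citation("5,000 gallons of diesel fuel", pages, 13))
```

A misattributed figure or a wrong page number fails the check, which is the point of tying every claim to a location a reader can inspect.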

Many consumer AI tools generate confident responses without citations. Without a way to verify, context rot becomes invisible: you don’t just lose precision, you lose the ability to detect that you’ve lost it.

Next time an AI gives you a factual claim about your documents, ask: “Can you show me exactly where that appears in the source?” If the answer is a page number and a highlighted passage, you’re working with a retrieval system. If the answer is “based on my analysis,” you’re working with summaries that degrade with each processing step.